The conclusion was reached after researchers evaluated over 9,500 of the largest transactional websites in terms of traffic, encompassing sectors such as banking, e-commerce, and ticketing businesses.

Bad bots are plaguing the internet, making up over 30% of internet traffic today. Cybercriminals can use them to target online businesses with fraud and other types of attacks, according to the latest research from bot and online fraud protection company DataDome.

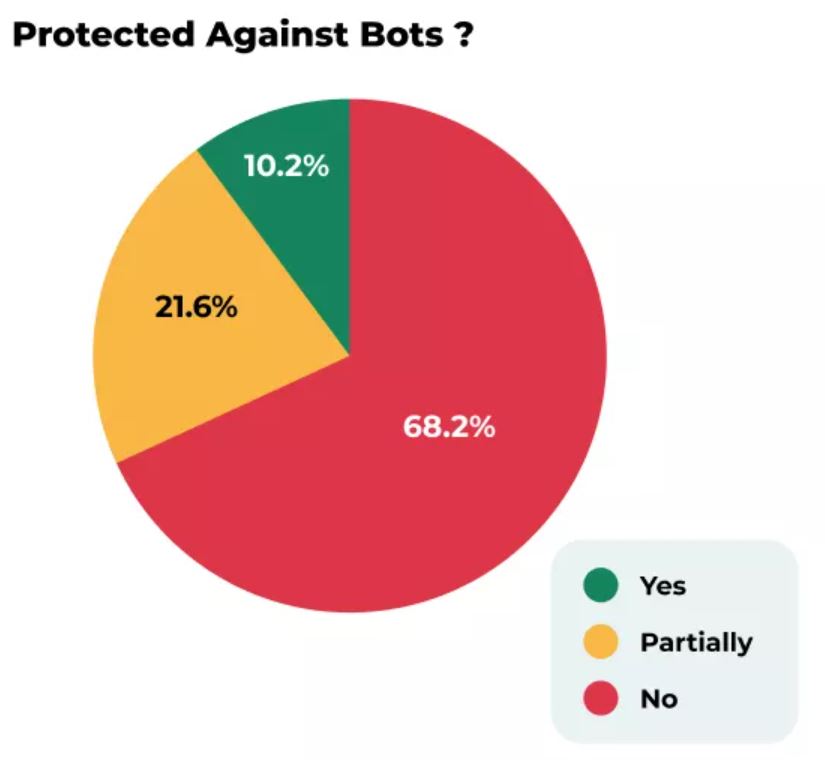

According to a report from DataDome, a company specializing in bot and online fraud protection, released on November 28, 68% of US websites lack adequate protection against simple bot attacks, highlighting how vulnerable US businesses could be to automated cyberattacks.

The researchers assessed more than 9,500 of the largest transactional websites in terms of traffic, including banking, e-commerce, and ticketing businesses. They found that US websites face significant risks ahead of the busy holiday shopping season. The findings also highlighted that traditional CAPTCHAs aren’t effective in preventing automated attacks.

According to DataDome’s report, shared with Hackread.com ahead of publication on Tuesday, 72.3% of e-commerce websites and 65.2% of classified ad websites failed the bot tests, and 85% of DataDome’s fake Chrome bots went undetected.

Among the 2,587 websites featuring one CAPTCHA tool, fewer than 5% could detect/block all bots. Interestingly, gambling sites were the best protected against bot attacks, with 31% blocking all the test bots. Only 10.2% successfully blocked all malicious bot requests.

DataDome’s Head of Research, Antoine Vastel, referred to bots as “silent assassins,” adding that bots are becoming more sophisticated by the day and that US businesses are unprepared for the “financial and reputational damage” bot attacks can cause.

According to Vastel, this includes threats such as ticket scalping, inventory hoarding, and account fraud. Vastel explained that bad bots can wreak havoc on businesses and consumers, exposing them to “unnecessary risk.”

These findings highlight the urgent need for US businesses to enhance their bot protection measures. Here are five key ways website owners and administrators can protect their websites against bot attacks:

- Implement CAPTCHA and reCAPTCHA: CAPTCHAs and reCAPTCHAs are effective tools for distinguishing between humans and bots. They can be used to prevent bots from submitting forms, signing up for accounts, or accessing restricted areas of your website.

- Use IP blacklisting: IP blacklisting involves identifying and blocking IP addresses that are known to be associated with malicious activity. This can help to prevent bots from accessing your website altogether.

- Monitor website traffic: By monitoring website traffic, you can identify patterns that may be indicative of bot activity. For example, a sudden surge in requests from a single IP address, or from many addresses behaving identically, could be a sign that your website is under automated attack.

- Implement rate limiting: Rate limiting is a technique that can be used to limit the number of requests that a user can make to your website in a given period of time. This can help to prevent bots from overwhelming your website with requests.

- Use web application firewalls (WAFs): WAFs are designed to protect websites from a variety of attacks, including bot attacks. They can be used to block malicious traffic and prevent bots from exploiting vulnerabilities in your website’s code.
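
To make the IP blacklisting and rate limiting points more concrete, here is a minimal Python sketch of how the two checks might be combined per request. It is an illustration under stated assumptions, not DataDome's method or any product's API: the `RequestGate` class, its parameters, and the example addresses (taken from documentation-reserved ranges) are all hypothetical. IP-based checks like these are only a first layer and are unlikely to stop the more sophisticated bots described in the report.

```python
import ipaddress
import time
from collections import defaultdict, deque


class RequestGate:
    """Hypothetical per-IP gate: a blocklist check plus a sliding-window rate limit."""

    def __init__(self, blocked_networks, max_requests, window_seconds):
        # Parse blocked CIDR ranges once so each lookup is a cheap membership test.
        self.blocked = [ipaddress.ip_network(n) for n in blocked_networks]
        self.max_requests = max_requests
        self.window = window_seconds
        # Maps each client IP to the timestamps of its recently allowed requests.
        self.history = defaultdict(deque)

    def allow(self, ip, now=None):
        """Return True if a request from `ip` should be served."""
        now = time.monotonic() if now is None else now

        # 1. IP blacklisting: reject addresses inside any blocked network.
        addr = ipaddress.ip_address(ip)
        if any(addr in net for net in self.blocked):
            return False

        # 2. Rate limiting: discard timestamps that fell out of the window,
        #    then reject the request if the client is already at its limit.
        stamps = self.history[ip]
        while stamps and now - stamps[0] >= self.window:
            stamps.popleft()
        if len(stamps) >= self.max_requests:
            return False

        stamps.append(now)
        return True


# Assumed policy for illustration: at most 3 requests per 10 seconds per IP.
gate = RequestGate(["203.0.113.0/24"], max_requests=3, window_seconds=10)
print([gate.allow("198.51.100.7", now=t) for t in (0, 1, 2, 3, 11)])
# prints [True, True, True, False, True]
print(gate.allow("203.0.113.9", now=0))
# prints False (address is inside the blocked range)
```

In this sketch the fourth request is rejected because three requests already fall inside the 10-second window, and the fifth is allowed once the earliest ones have expired. A production setup would more likely enforce such rules at a reverse proxy or WAF and keep the counters in shared storage so that every server sees the same state.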
