Deepfake technology poses a significant threat to the upcoming US elections because it is not just a technological challenge but a manifestation of a broader deception mechanism, according to Check Point Research (CPR).

In their latest report, cybersecurity researchers at Check Point Research highlighted the dangers posed by the widespread availability of artificial intelligence-based technologies, particularly deepfakes, in enabling electoral fraud.

Researchers cited deepfake technology as a significant threat to the authenticity of the electoral process due to features like voice cloning that can manipulate voters and sabotage the entire process. The technology is cheap, accessible to anyone with an internet connection, and untraceable, which hinders election security given the ongoing challenges in legislating against these technologies.

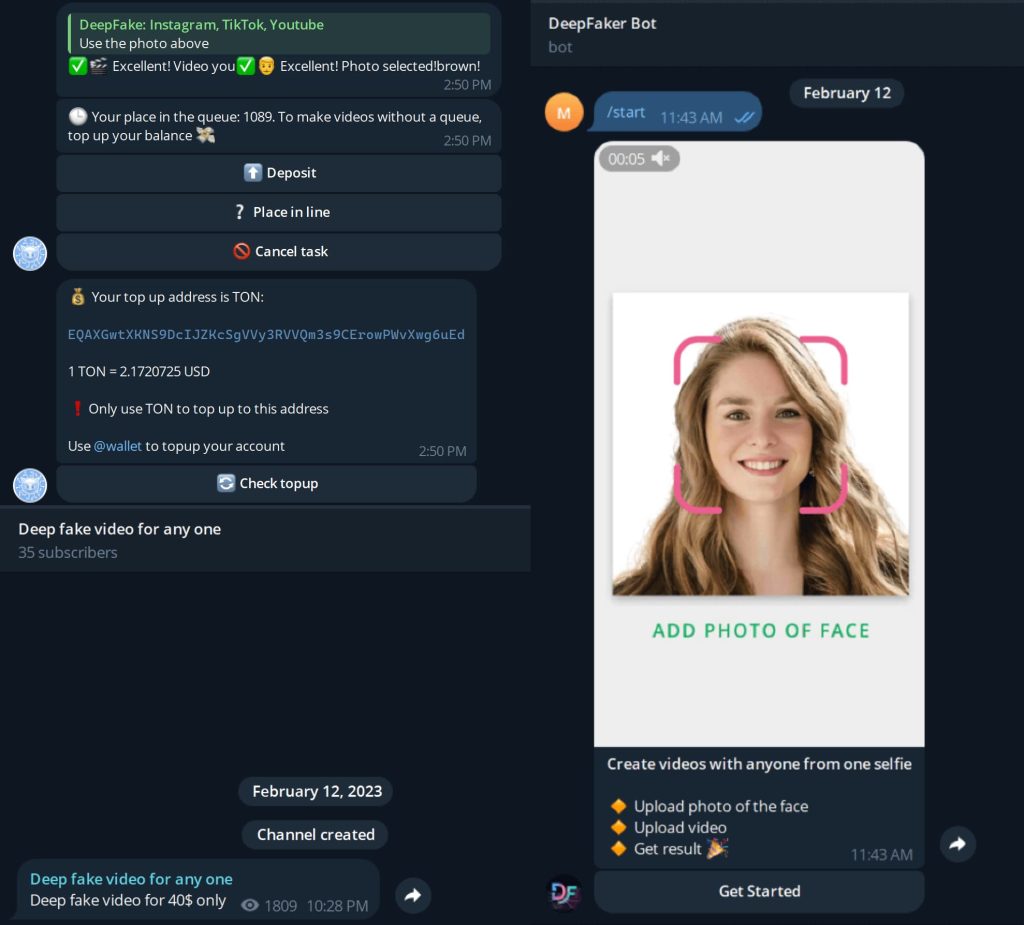

Deepfake technology allows users to create “fabricated audiovisual content,” which poses a threat to public opinion and democratic institutions, researchers noted. Deepfake services are widely available, with over 3,000 repositories on GitHub and at least 400 to 500 channels on Telegram, and they range from automated bots to personalized services. Pricing varies from $2 per video to $100 for multiple attempts, making it affordable to commission deceptive content.

Recent incidents of damage caused by deepfake technology highlight its growing threat. In February 2024, an Asian company fell victim to scammers who impersonated the company’s CFO during an online meeting, resulting in a loss of over $25 million.

On platforms like YouTube, deepfake technology is being exploited by scammers to perpetrate crypto scams. Verified channels have been targeted to steal millions of dollars worth of crypto, with deepfakes of prominent figures such as Elon Musk, Bill Gates, Ripple’s CEO Brad Garlinghouse, Michael J. Saylor, and others being used to deceive unsuspecting viewers.

In June 2023, deepfake technology was utilized to disseminate fabricated video messages from President Putin. Similarly, in 2022, a deepfake video of Ukrainian President Zelensky went viral, urging Ukrainians to surrender.

Despite efforts to combat deepfakes, such as the launch of McAfee’s tool MockingBird, which boasts a 90% accuracy rate in detecting malicious content, the potential damage from deepfake technology remains considerable and, in many cases, irreversible.

In a blog post published on 22 February 2024, the Check Point Research team warned of “the potential for election fraud through the adept use of artificial intelligence and deepfake technologies, orchestrated by a clandestine network operating from the shadows, leaving no digital fingerprints.”

Researchers noted that an underground network of actors can manipulate public perception and undermine democratic integrity through deepfakes. The anonymity of this technology makes it difficult to hold anyone accountable. Traditional cybersecurity measures are insufficient as the threat lies in the entire ecosystem of deception it enables, including bots, fake accounts, and anonymized services.

Voice cloning, a subset of deepfake technology that uses machine learning and artificial intelligence to replicate a person’s voice with remarkable accuracy, is particularly effective in spreading misinformation. It allows the creation of convincing fake audio clips, and incidents involving robocalls with fabricated messages from political leaders have already been observed.

For instance, a robocall featuring a fake voice of US President Joe Biden warning New Hampshire voters not to cast their ballots would cost from $10 to several hundred dollars, depending on the level of accuracy and technological efficacy required.

To protect democratic processes from deepfakes, a multifaceted approach involving legislative measures, public awareness, technological solutions, and international cooperation is needed, researchers concluded. This includes enhanced digital literacy and collaboration between technology companies and law enforcement.