The National Institute of Standards and Technology (NIST) has detailed critical AI vulnerabilities that threat actors can exploit to compromise AI systems.

In a comprehensive study, NIST researchers examined the vulnerabilities in artificial intelligence (AI) systems, showing how adversaries and malicious threat actors can exploit untrustworthy data.

Despite these concerns, AI has become a vital part of our lives. From detecting dark web cyber attacks to diagnosing critical medical conditions when doctors fall short, it is safe to say that AI is here to stay. However, it requires strong cybersecurity measures to keep it from being compromised by malicious threat actors.

Therefore, the research, titled “Adversarial Machine Learning: A Taxonomy and Terminology of Attacks and Mitigations,” can be termed a significant contribution to the ongoing effort to establish trustworthy AI.

AI Vulnerabilities Exposed: No Foolproof Defense

NIST’s computer scientists have discussed the inherent risks associated with AI systems, revealing that attackers can intentionally manipulate or “poison” these systems to cause malfunction.

The publication emphasizes the absence of a foolproof defence mechanism against such adversarial attacks, urging developers and users to exercise caution amidst claims of impenetrable safeguards.

AI in the Crosshairs: Understanding Attacks and Mitigations

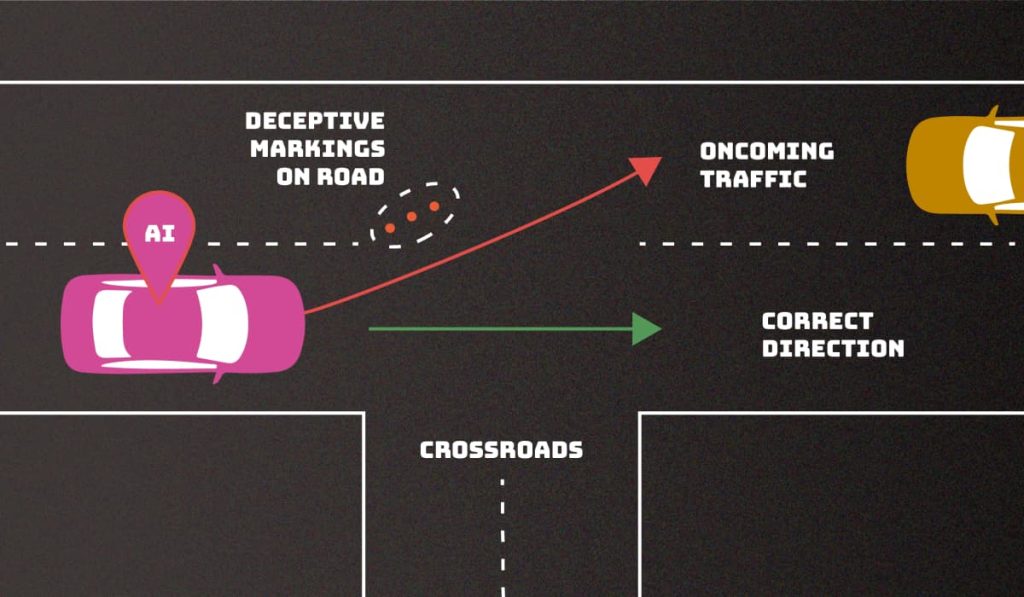

The study classifies major attacks into four categories: evasion, poisoning, privacy, and abuse. Evasion attacks aim to alter inputs post-deployment, while poisoning attacks introduce corrupted data during the training phase.

Privacy attacks occur during deployment and seek to extract sensitive information about the AI or its training data for misuse, while abuse attacks involve inserting incorrect information into a source, such as a webpage or online document, that the AI then absorbs.
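To make the difference between evasion and poisoning concrete, here is a minimal toy sketch in Python. It is not taken from the NIST publication: the one-dimensional "classifier", the sample values, and the function names `train_threshold` and `predict` are all hypothetical, chosen only to show where in the pipeline each attack acts.

```python
from statistics import mean

def train_threshold(samples):
    """Fit a toy 1-D classifier: the midpoint between the two class means."""
    benign = [x for x, label in samples if label == 0]
    malicious = [x for x, label in samples if label == 1]
    return (mean(benign) + mean(malicious)) / 2

def predict(threshold, x):
    """Return 1 (malicious) if x is above the threshold, else 0 (benign)."""
    return 1 if x > threshold else 0

# Hypothetical clean training data: (feature value, label).
clean = [(1.0, 0), (2.0, 0), (3.0, 0), (7.0, 1), (8.0, 1), (9.0, 1)]
clean_threshold = train_threshold(clean)

# Evasion: the trained model is untouched. The attacker alters the input
# at inference time so that it lands on the benign side of the boundary.
original_input = 5.5
evasive_input = 4.5
evasion_before = predict(clean_threshold, original_input)
evasion_after = predict(clean_threshold, evasive_input)

# Poisoning: the attacker slips mislabeled points into the training set,
# which shifts the learned boundary before the model is ever deployed.
poison = [(9.0, 0), (10.0, 0), (11.0, 0)]
poisoned_threshold = train_threshold(clean + poison)
probe = 6.5
poison_before = predict(clean_threshold, probe)
poison_after = predict(poisoned_threshold, probe)

print("clean threshold:", clean_threshold)
print("evasion:", evasion_before, "->", evasion_after)
print("poisoned threshold:", poisoned_threshold)
print("poisoning:", poison_before, "->", poison_after)
```

In this sketch the evasion attack changes only the input, while the poisoning attack changes only the training data. Real attacks target far larger models and datasets, but the split between inference-time and training-time manipulation is the same.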

Challenges and Mitigations

The research acknowledges the challenges in safeguarding AI from misdirection, particularly because the datasets used in training are too large for humans to monitor and filter. NIST provides an overview of potential attacks and corresponding mitigation strategies, emphasizing the community’s need to enhance existing defences.

AI’s Vulnerability Spotlight: The Human Element

Highlighting real-world scenarios, the study explores how adversarial actors can exploit vulnerabilities in AI, resulting in undesirable behaviours. Chatbots, for instance, may respond with abusive language when manipulated by carefully crafted prompts, exposing the vulnerability of AI in handling diverse inputs. The complete research material behind this study is available from NIST as a PDF.

For insights into NIST’s latest study, we reached out to Joseph Thacker, principal AI engineer and security researcher at AppOmni, a SaaS security pioneer. Joseph told Hackread.com that NIST’s study is “the best AI security publication” he has seen so far.

“This is the best AI security publication I’ve seen. What’s most noteworthy are the depth and coverage. It’s the most in-depth content about adversarial attacks on AI systems that I’ve encountered. It covers the different forms of prompt injection, elaborating and giving terminology for components that previously weren’t well-labelled,” noted Joseph.

“It even references prolific real-world examples like the DAN (Do Anything Now) jailbreak and some amazing indirect prompt injection work. It includes multiple sections covering potential mitigations but is clear about it not being a solved problem yet,” he added.

“There’s a helpful glossary at the end, which I personally plan to use as extra ‘context’ to large language models when writing or researching AI security. It will make sure the LLM and I are working with the same definitions specific to this subject domain. Overall, I believe this is the most successful over-arching piece of content covering AI security,” Joseph emphasised.

Developers’ Call to Action: Enhancing AI Defenses

NIST encourages the developer community to critically assess and improve existing defences against adversarial attacks. The study, a collaborative effort between government, academia, and industry, provides a taxonomy of attacks and mitigations, recognizing the evolving nature of AI threats and the imperative to adapt defences accordingly.
