Patience is no longer a virtue when talking about website or app performance. Users get frustrated after waiting for 16 seconds for a web page to load. And most of the time, these websites and apps have a database to store information. And if your job is a MySQL developer, you must take MySQL performance tuning seriously.

Many websites use MySQL. For instance, in 2022, WordPress is used by 43.2% of all websites on the internet. And guess what database powers WordPress? It’s MySQL.

WordPress is also the tool I used to write this article. So, I rely on MySQL to store every text and image you see here.

The load time is so vital that it can make or break a sale or a company’s reputation. In 2022, you need to aim for 1-4 seconds load time for a web page, according to one study.

Yet, performance tuning is a big topic. CPU, RAM, and disk are top priorities for hardware. Then, there’s also network bandwidth and MySQL configuration. But no matter how fast your hardware is, the database itself and the queries you form can slow it down.

This article will cover a primer on MySQL database and query performance tuning. It’s a primer because optimization is one big chapter in the official manual.

So, if you’re coding SQL for a while, you can level up as a developer if you optimize your queries religiously.

And what’s in it for you and me if we take MySQL performance tuning as a goal?

The Key Benefits of MySQL Performance Tuning

Three words: Everybody is happy!

Imagine a very responsive app. Each piece of information is retrieved and displayed in a snap. Saving changes aren’t far behind either. The experience is so smooth. You can do more in less time.

That’s the dream, not just for us but for the users as well.

And if this dream comes true, your users are happy. Your boss, your team, everybody can go home early.

Then, imagine an app with the features the users want. But each page completely displays after 10 or more seconds. More than 20 years ago, users may be more forgiving. But not today.

If this happens, you and your team will spend a lot of time optimizing after the fact. And it’s harder this time. Because you must do this together with fixing problems and adding features.

Of course, we can only plan and do so much. We can’t account for everything. But there are things you can do at the beginning so it won’t be painfully hard when you release.

The Top 5 Tips for MySQL Performance Tuning (With Examples)

In this article, the MySQL version used is 8.0.30 installed on Ubuntu 22.04. And the GUI tool used is dbForge Studio 2022 for MySQL v9.1.21 Enterprise Edition.

Rest assured this won’t be another article with vague ideas. Examples will be provided. And images will be plentiful to support the ideas. And this is something that you can do, as you will see.

1. Start with a Good Database Design

Obvious? Pretty much.

Your database design will dictate how hard it will be for you to maintain your database.

Have you inherited a system with a huge MySQL database? You probably hate it when you see fat tables with hundreds of columns. These tables also receive frequent updates. And their data types? The fat ones too like BIGINT, VARCHAR(4000), and more. But the data stored are not even half of it. And the overall design? It’s half normalized the database is not even normal. So, the performance was also expected.

If you saw Disney’s Zootopia, you probably met Flash the sloth and his pals. Everything in them is so delayed. They even laugh at funny jokes seconds later. Can you relate to Judy Hopps’ frustration? That’s how it feels to use a slooowww system.

Knowing the headaches of your huge ‘inheritance’ teaches you not to do the same in the future. So, aside from normalizing (and denormalizing) databases, here’s what you need to do.

Use the Right Data Types and Sizes

Common columns can be strings, numbers, and dates. But there are various string data types. There are also a bunch of numeric types and data types.

So, which data type to use? Is it CHAR or VARCHAR? Is it INT or SMALLINT?

Of course, different data types have different characteristics and limits. When you use a smaller type, like SMALLINT or TINYINT, and you hit the limit, an error will occur. So, just use the big types like BIGINT?

Not so fast.

Column Data Type and Sizes

Each data types have storage requirements. And if you use the bigger ones, you consume more disk space and RAM. This hampers the speed of your queries. So, if you only need 1 to 100,000 numbers in a column, a MEDIUMINT is much preferred over a BIGINT. BIGINT consumes 8 bytes while MEDIUMINT is 3 bytes.

The same concept applies to other data types as well. So, the rule of thumb here is to use the smallest possible data type for a column.

Row Formats

Another consideration is the row format of tables. MySQL has different row formats to choose from. The default is DYNAMIC. For the least space, use the COMPRESSED row format.

And speaking of row formats and table rows, think of it as a line in a page of a notebook. Because MySQL arranges tables into tablespaces. And tablespaces contain pages. Each page has the same size, just like a page in a real notebook. In MySQL, the default page size is 64KB. MySQL will allocate rows on a page. And if a row doesn’t fit a page, the variable-length columns are likely relocated to overflow pages. So, when this happens, querying a row or rows with columns in the overflow pages increases I/O. And it slows your query.

In other words, performance decreases if data in one or more rows will not fit a page.

Use the Right Indexes

Without table indexes, MySQL will search your tables row by row until it finds a match. With indexes, MySQL uses index keys to find matches quickly.

It’s best to use indexes to columns used as foreign keys. And also, columns used in WHERE, JOIN, and GROUP BY. But you can’t just create indexes. Unnecessary indexes can also reduce performance on INSERT, UPDATE, and DELETE operations. So, strike a balance in using indexes.

You will see a performance comparison of tables with and without indexes later.

Consider the MEMORY Storage Engine for Non-Critical Data

Do you have tables that you access frequently but rarely update it? Then, consider using the MEMORY storage engine. Unlike InnoDB, MEMORY tables use RAM to store data. So, in certain scenarios, accessing data from here is faster.

But there’s a catch.

When MySQL crashes or the service restarts, you lose the tables. So, use this for temporary work areas or read-only scenarios. And make sure the data volume can fit in RAM.

Test to confirm if your candidate tables perform better than InnoDB equivalents.

2. Spot Slow Queries with These

You need to find out what queries are slow during work hours. Rather than guessing, there’s an intelligent way to spot them.

There are 2 ways to do it in MySQL.

Use the Slow Query Log

One way to spot slow queries is to enable the slow query log in mysqld.cnf.

You can locate this file in a Linux system in /etc/mysql/mysql.conf.d/. To open and edit the file, open a Terminal window, and issue the following command:

sudo nano /etc/mysql/mysql.conf.d/mysqld.cnf |

Type the correct password for root. And the editor will open. Look for the following entries in your own copy of mysqld.cnf.

. . .#slow_query_log = 1#slow_query_log_file = /var/log/mysql/mysql-slow.log#long_query_time = 2#log-queries-not-using-indexes. . . |

You need to uncomment these entries. To uncomment, remove the pound(#) symbol. If you didn’t find them in the file, you need to add them manually. Here’s mine.

Figure 1. Configuring the Slow Query Log.

Here’s what each entry means:

- slow_query_log – set this to 1 to enable slow query log.

- slow_query_log_file – the full path and filename where the slow query log is.

- long_query_time – defaults to 2 seconds. Any query that ran more than this will be logged.

- log-queries-not-using-indexes – not required for the slow query log to function. But it’s good for spotting queries that didn’t utilize an index.

After editing mysqld.cnf, restart the MySQL service using the Terminal. Run the following:

sudo systemctl restart mysql |

Then, you need to test.

I tried importing some tables from SQL Server to MySQL for testing. I chose the AdventureWorks sample database. And I used dbForge Studio import tool to do that.

And then, I ran this statement:

UPDATE `sales.salesorderdetail` sodINNER JOIN `sales.salesorderheader` soh ON sod.SalesOrderID = soh.SalesOrderIDSET sod.UnitPrice = 1459WHERE sod.ProductID = 758AND soh.OrderDate=’2012-04-30′; |

This ran for almost 17 seconds. To see if it logs alright, I did the following in the Terminal:

sudo nano /var/log/mysql/mysql-slow.log |

And here’s what I found:

Figure 2. Spotting slow queries in the Slow Query Log.

See the portion inside the green box? It ran in 16.82 seconds. The query is there. Now all you need to do is to fix the problem. Later, we will find out the reasons why it’s slow by profiling the query.

But There’s a Catch to This Method

Using the Slow Query Log to spot slow queries during MySQL performance tuning is a real help.

But the catch is you need to restart the MySQL service if it’s not yet enabled. The list can also be so long that it’s hard to find what you’re looking for. Lastly, the statement needs to finish running before it is logged.

But there’s another way.

Using Performance Schema Statement Event Tables

In MySQL, you will find a database called performance_schema. As the name suggests, you can find the performance data of your MySQL server. It logs events that take time. And for queries, it logs the statement and the time it took to execute it.

There are many tables there. But in particular, we are interested in the events_statements_current and the events_statements_history.

- events_statements_current – contains the current status of the thread’s most recent statement events.

- events_statements_history –contains the N most recent statement events that have ended per thread. The value of N depends on the performance_schema_events_statements_history_size system variable. I have 10 as a value of N in my machine. The Performance Schema auto sizes this value. Values on this table are derived from events_statements_current. Rows are added when a statement has ended execution.

If you find these tables empty, the statement event collection might be disabled. Yet by default, they should be enabled.

Configuring Statement Events

You need 2 tables to check if event collection is enabled.

- setup_instruments – contains instruments with names that begin with ‘statement‘. These are individual statement event classes like a SELECT, UPDATE, or more. The one you need should be enabled.

- setup_consumers – contains consumer values with the names of the statement event tables. The events_statements_xxx tables you need should be enabled.

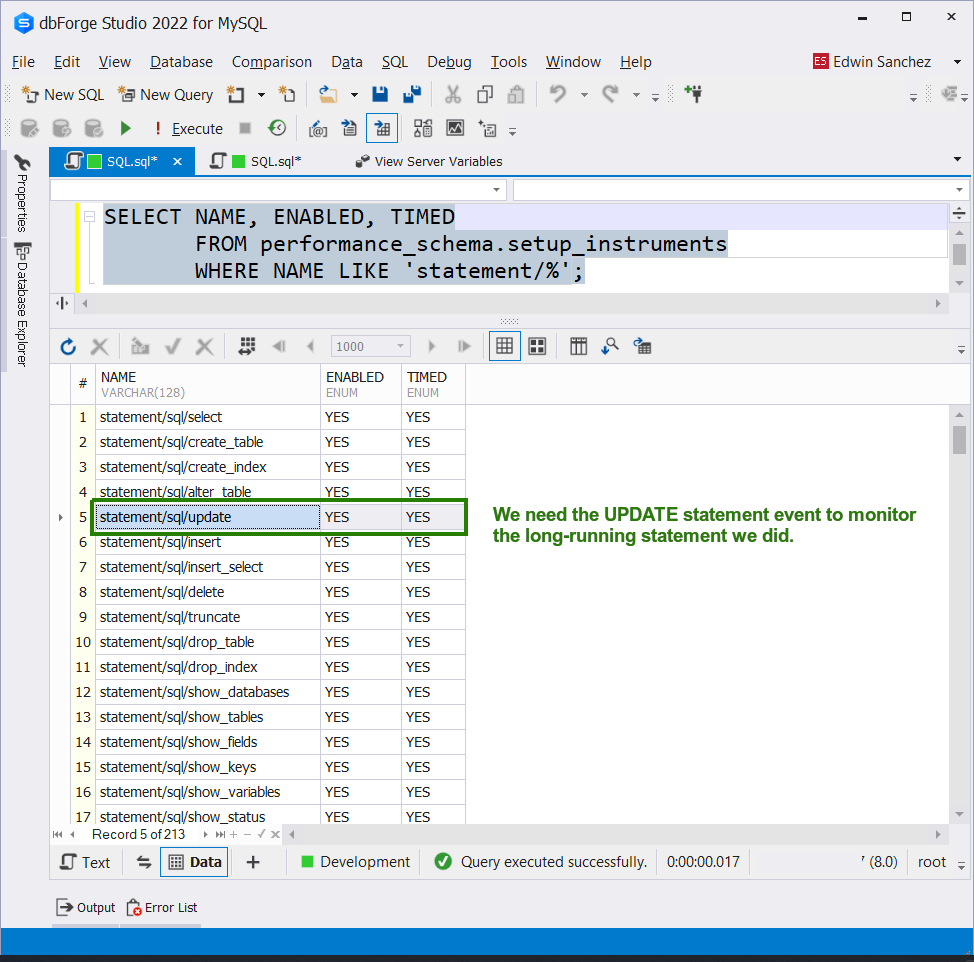

Here’s how to check for the setup_instruments.

SELECT NAME, ENABLED, TIMED FROM performance_schema.setup_instruments WHERE NAME LIKE ‘statement/%’; |

Then, you should see YES for ENABLED and TIMED columns for the UPDATE statement event. Here’s what I have in my MySQL server:

Figure 3. Checking the Setup Instruments configuration.

If the values are NO, update the setup_instruments table.

Then, check for the setup_consumers:

SELECT * FROM performance_schema.setup_consumers WHERE NAME LIKE ‘%statements%’; |

Check out the screenshot below.

Figure 4. Checking the Setup Consumers configuration.

NOTE: If you can’t find long-running queries in the 2 tables, you also need to enable the events_statements_history_long. Then, look for the query in question in that table. This is not enabled by default as seen above.

Checking the Slow Queries

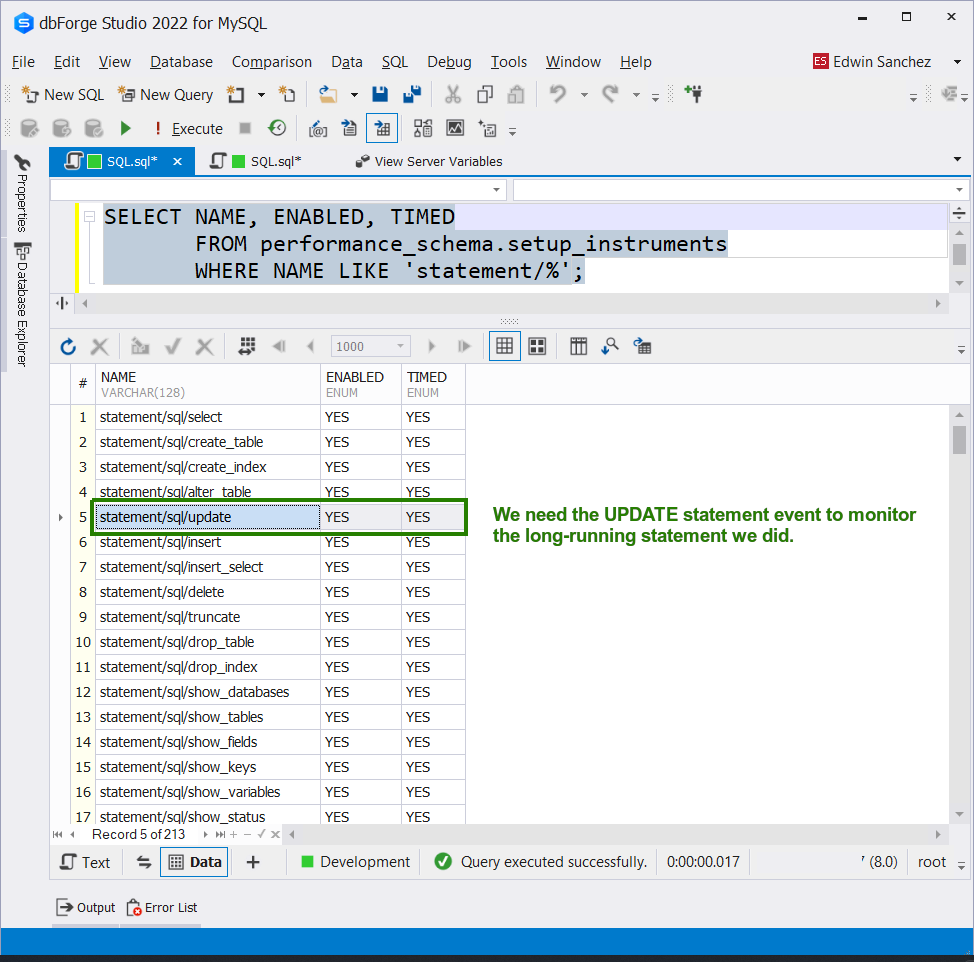

If configurations are good, you can now start looking for slow queries. Here’s how:

SELECT esh.EVENT_ID ,esh.EVENT_NAME,esh.TIMER_WAIT,esh.SQL_TEXT,esh.CURRENT_SCHEMAFROM performance_schema.events_statements_history eshWHERE esh.EVENT_NAME LIKE ‘%update%’; |

The above uses the events_statements_history because the query execution is already finished. There can be more in that table. So, we are looking specifically for the UPDATE statement we did earlier. Here’s a screenshot.

Figure 5. Searching for the slow query in events_statements_history table.

Note the TIMER_WAIT. That’s how long the query ran in picoseconds. The second time I ran it took almost 16 seconds. You won’t see the SQL_TEXT clearly. So, you need to right-click it. And select Data Viewer. Then, you will see the whole statement.

That’s how to spot slow queries. Next, we need to know why they are slow.

3. Use Query Profiling to Know Why Your Query is Slow

After knowing what queries are so slow, you need to profile these queries. Without query profiling, you won’t know why a query is slow. And if you don’t know the reason, you can’t fix them.

Or maybe rely on your hunches and guesses. But that’s not smart.

MySQL offers different ways to profile your query. One is using the statement events tables you saw earlier. Another is using EXPLAIN.

You can use any MySQL tool to query the statement events table and run MySQL EXPLAIN. Or use dbForge Studio for MySQL’s Query Profiling Mode.

To enable Query Profiling Mode, click the corresponding button in the toolbar. See Figure 6 below.

Figure 6. Enabling the Query Profiler Mode in dbForge Studio for MySQL

What Problem Areas to Look For in the Query Profile

1. Table or Index Scan

This is a row-by-row scan of a table or index to get the needed rows. For a large table, this is very expensive. A missing index is the most common culprit for a table scan.

This is not always bad, though. If the table is tiny, like 50 rows or less, this is fine. Sometimes better than using an index. And if the intention of the query is all rows, then the query optimizer will likely use a table or index scan.

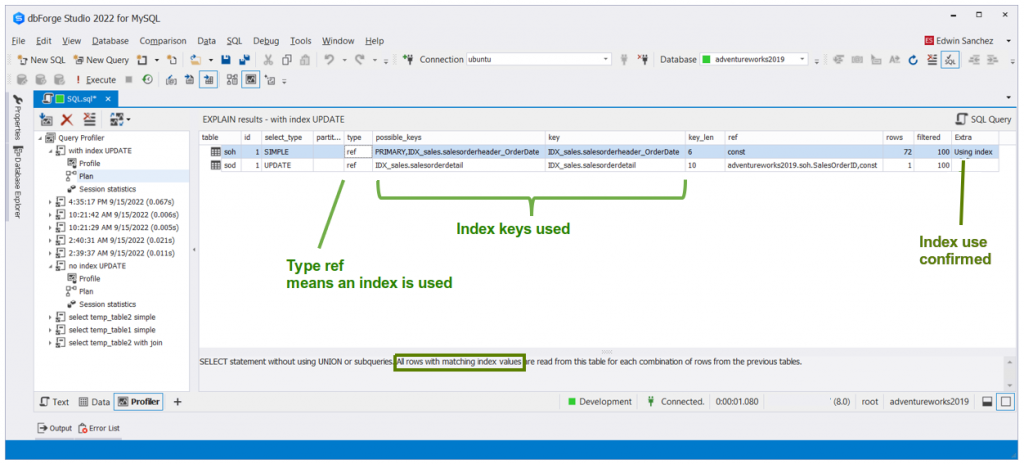

Here’s an example of a table scan in the EXPLAIN results in dbForge Studio.

Figure 7. The EXPLAIN results show a table scan for 2 tables.

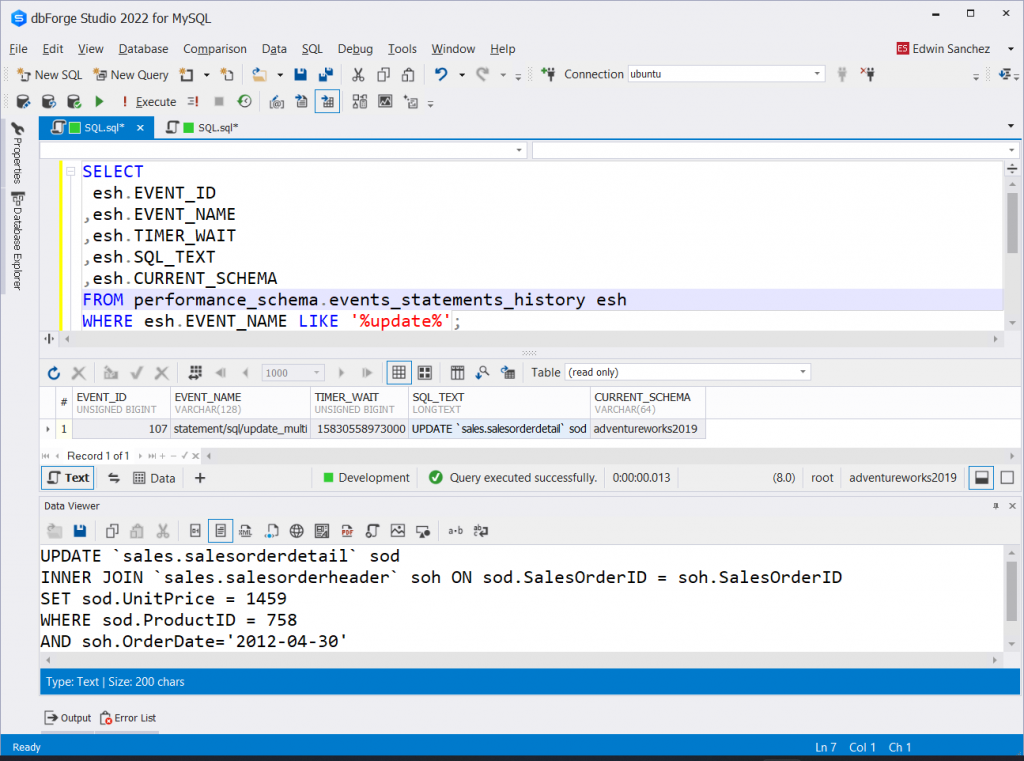

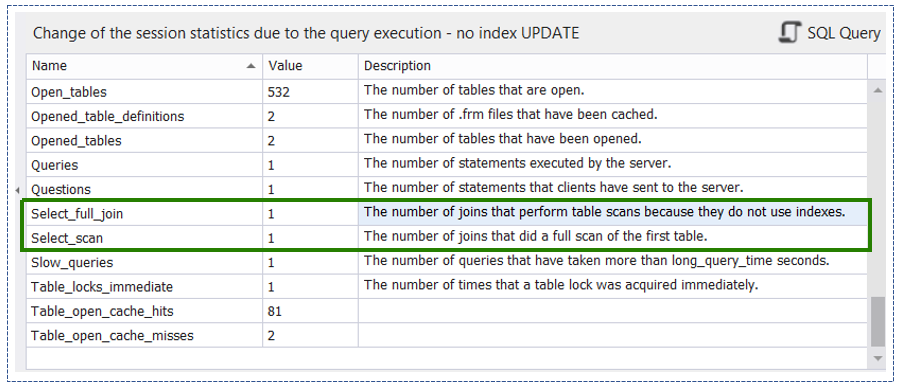

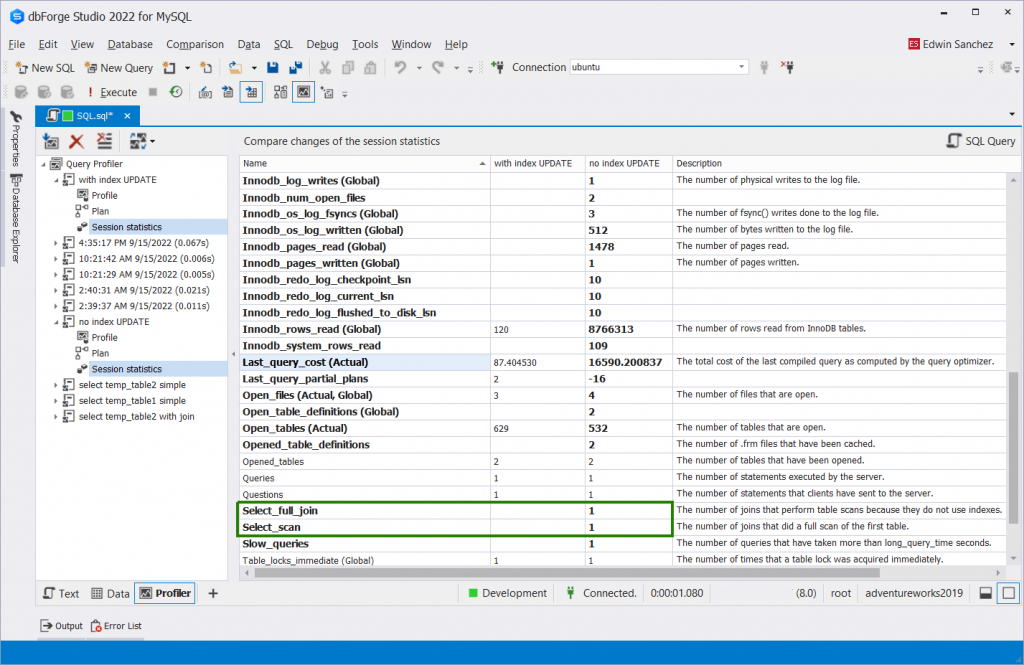

Session Statistics is another area where table scan is reported. See a sample below.

Figure 8. Session Statistics in dbForge Studio for MySQL showing a table scan occurs.

The presence of a Select_full_join and Select_scan with a value tells that your query had a table scan.

Using the SQL editor in dbForge Studio, use EXPLAIN ANALYZE on the statement to reveal a table scan, if any. Check a sample below.

Figure 9. EXPLAIN ANALYZE results showing table scans.

So, there are 3 ways shown here to find clues about table scans.

2. Number of Rows

Related to the table and index scan is the number of rows. If what is being scanned is too large but the result is few, this is a red flag. A common culprit is a missing index for a table scan.

Looking at Figures 7 to 9 about table scans show a large number of rows scanned.

3. Sorting

Sorting is another expensive operation in query execution. An ORDER BY clause in your SELECT statement will trigger sorting. Do you see that your query runs fast without ORDER BY but slows down when you add it? Then, consider simplifying your query and add applicable indexes for ORDER BY.

4. Index Not Used or No Good Index Used

There are other details not found in the Session Statistics and EXPLAIN results. But you can find them in the Events Statements tables.

In the Events Statement tables, there are columns NO_INDEX_USED and NO_GOOD_INDEX_USED. If the value for either column is 1, the index was not used even if there’s one.

To see that it happened in your query, use the events_statements_xxxx table. The query in question exists there. See a sample below specific for these 2 columns:

SELECT eshl.EVENT_ID, eshl.END_EVENT_ID, eshl.EVENT_NAME, eshl.SOURCE, eshl.TIMER_WAIT, eshl.LOCK_TIME, eshl.SQL_TEXT, eshl.NO_INDEX_USED, eshl.NO_GOOD_INDEX_USEDFROM performance_schema.events_statements_history_long eshlWHERE eshl.SQL_TEXT LIKE ‘%INNER JOIN sakila.film_actor fa ON a.actor_id = fa.actor_id%’and eshl.EVENT_ID=1288; |

You have to supply the right values for the WHERE clause. Initially, query without the EVENT_ID. Use a portion of the query in question for the SQL_TEXT. Then, search for the list of results and find the right EVENT_ID.

Here’s a screenshot of the result:

Figure 10. Events statements history showing a query with no index used.

The dbForge Studio Session Statistics and EXPLAIN results will complement your findings here.

5. Internal Temporary Tables

Complex queries may trigger the creation of internal temporary tables in MySQL. The use of derived tables, common table expressions, and others are some of the reasons. To minimize these, simplify the query.

The Extra column in EXPLAIN results may include this information. But you can also find this in the events_statements_xxxx tables using the columns CREATED_TMP_TABLES and CREATED_TMP_DISK_TABLES.

Here’s a sample query that will use an internal temporary table.

SELECT f.title AS film_title,CONCAT(a.last_name, ‘, ‘,a.first_name) AS actor_name,f.release_year,c.name AS categoryFROM sakila.actor aINNER JOIN sakila.film_actor fa ON a.actor_id = fa.actor_idINNER JOIN sakila.film f ON fa.film_id = f.film_idINNER JOIN sakila.film_category fc ON f.film_id = fc.film_idINNER JOIN sakila.category c ON fc.category_id = c.category_idWHERE c.category_id = 5ORDER BY actor_name; |

Then, check the EXPLAIN results below.

Figure 11. Use of internal temporary table shown in EXPLAIN results.

4. Fix the Problem Based on Findings

Let’s have the same slow query and fix it.

UPDATE `sales.salesorderdetail` sodINNER JOIN `sales.salesorderheader` soh ON sod.SalesOrderID = soh.SalesOrderIDSET sod.UnitPrice = 1459WHERE sod.ProductID = 758AND soh.OrderDate=’2012-04-30′; |

This is the same query from the Slow Query Log earlier (Figure 1).

We already know from Figures 7 and 8 that this query used table scans for the 2 tables used. The Select_full_join value of 1 in the Session Statistics shows that it didn’t use an index. Checking the tables from the Object Explorer in dbForge Studio shows that both tables have no index.

The dbForge Studio Import from SQL Server did not include the indexes and primary keys. Only the table structure and data. So, to fix this problem, create the indexes.

ALTER TABLE adventureworks2019.`sales.salesorderheader`ADD PRIMARY KEY(SalesOrderID); ALTER TABLE adventureworks2019.`sales.salesorderheader`ADD INDEX `IDX_sales.salesorderheader_OrderDate` (OrderDate); ALTER TABLE adventureworks2019.`sales.salesorderdetail`ADD PRIMARY KEY(SalesOrderDetailID); ALTER TABLE adventureworks2019.`sales.salesorderdetail`ADD INDEX `IDX_sales.salesorderdetail` (SalesOrderID, ProductID); |

Then, repeat what you did in the Query Profiler to see if things improved.

In this example, it certainly did improve. See how much execution time was cut by comparing the new with the old profile.

Figure 12. Execution time improvements after indexing.

To do the comparison in the Query Profiler, hold the CTRL key then click both profiles as seen in Figure 12.

Is the table scan gone? Let’s check the EXPLAIN results (Figure 13) and the Session Statistics (Figure 14).

Figure 13. EXPLAIN results confirm the use of an index. The table scan is gone.

Figure 14. Session Statistics show reduced or blank values after indexing. Select_scan and Select_full_join included.

This proves that indexing tables increase query performance. And that’s how to do query profiling.

To know more about interpreting EXPLAIN results, check out the official documentation.

5. Avoid These Code Smells to Improve MySQL Performance Tuning

You already learn how to work on columns and rows in your table. And you learn how to spot slow queries. Then, you learn how to profile your queries to zero in on the problems. But you will soon create more SQL queries and scripts.

So, try to avoid these common code smells.

SELECT *

How many times have you heard or read about avoiding SELECT *? This time, let’s prove why you need to avoid this in production code.

Here’s a sample SELECT *.

SELECT* FROM sakila.actor aINNER JOIN sakila.film_actor fa ON a.actor_id = fa.actor_idINNER JOIN sakila.film f ON fa.film_id = f.film_idINNER JOIN sakila.film_category fc ON f.film_id = fc.film_idINNER JOIN sakila.category c ON fc.category_id = c.category_idWHERE c.category_id = 5; |

And let’s compare it to this:

SELECT f.title AS film_title,CONCAT(a.last_name, ‘, ‘,a.first_name) AS actor_name,f.release_year,c.name AS categoryFROM sakila.actor aINNER JOIN sakila.film_actor fa ON a.actor_id = fa.actor_idINNER JOIN sakila.film f ON fa.film_id = f.film_idINNER JOIN sakila.film_category fc ON f.film_id = fc.film_idINNER JOIN sakila.category c ON fc.category_id = c.category_idWHERE c.category_id = 5 |

Let’s see if SELECT <column list> will win.

Figure 15. Using SELECT * is slower as seen in this query profile comparison.

The EXPLAIN results of both are almost identical. The indexes and keys used are the same.

Meanwhile, the Session Statistics reveal more.

Figure 16. SELECT * vs SELECT <column list>. The logical reads are higher with SELECT * compared to using a column list.

Figure 16 highlights the buffer pool read requests or logical reads. Using SELECT * has more logical reads meaning it is more expensive. Since this is a global variable, you need to use this metric in a separate database where only you have a session.

So, this proves why SELECT * is not advisable to use in production code.

Having Functions in WHERE Clause

This is another common mistake. Consider the example below.

SELECT c.last_name,c.first_name,p.payment_date,p.amount FROM sakila.payment pINNER JOIN sakila.customer c ON p.customer_id = c.customer_idWHERE year(p.payment_date) = 2005AND MONTH(p.payment_date) = 8; |

It uses the YEAR and MONTH functions in the WHERE clause

Here’s a better version:

SELECT c.last_name,c.first_name,p.payment_date,p.amount FROM sakila.payment pINNER JOIN sakila.customer c ON p.customer_id = c.customer_idWHERE p.payment_date BETWEEN ‘2005-08-01’ AND ‘2005-08-31’ |

Let’s have the Session Statistics of these 2 queries.

Figure 17. Query profile comparison using logical reads.

From the logical reads alone, you can see that using functions in the WHERE clause is a bad idea.

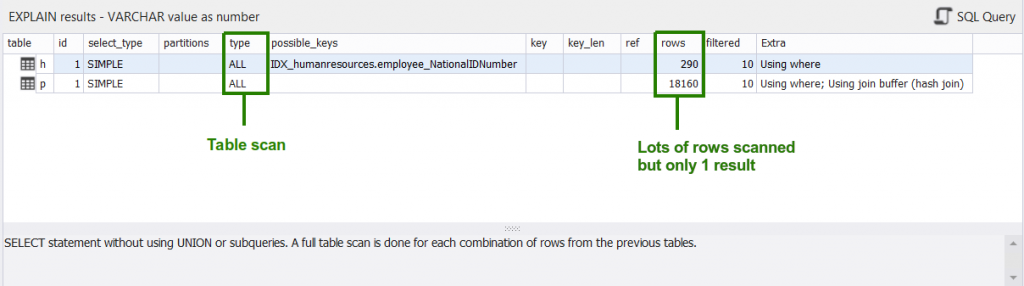

Implicit Conversion

Sometimes you define a column as VARCHAR even though the value is a number. Columns like an ID number or National ID are numbers but you don’t need to do calculations with them. So, if you use it in a query, what happens when you don’t enclose values in quotes?

MySQL will convert the number to VARCHAR automatically. That’s implicit conversion. Then, a table scan will result.

Here’s an example. The NationalID column is VARCHAR. But the query below uses a numeric value.

SELECT h.BusinessEntityID, h.JobTitle, h.BirthDate, h.MaritalStatus, h.Gender, h.HireDate, p.LastName, p.FirstNameFROM adventureworks2019.`humanresources.employee` hINNER JOIN adventureworks2019.`person.person` p ON h.BusinessEntityID = p.BusinessEntityIDWHERE h.NationalIDNumber = 519899904; |

Here are the EXPLAIN results in Figure 18.

Figure 18. A table scan results from the implicit conversion of values in a WHERE clause.

But if you enclose the NationalID value with quotes, the index will be used.

Use of LIKE and Wildcards

Lastly, using LIKE with wildcards in a WHERE clause will also result in a table or index scan.

Here’s an example:

SELECT * FROM adventureworks2019.`person.person` pWHERE p.LastName LIKE ‘rob%’; |

You can add a compound index to LastName and FirstName columns if you experience a table scan. And see if the results improve. Consider also if this is really needed by the calling app and adjust the design accordingly.

Takeaways

We cover some of the basic MySQL performance tuning. And to recap, you need to:

- Implement good database design with the right data types, index, and row formats.

- The number and size of columns should also fit on a page to avoid storing to overflow pages.

- Spot slow queries with Slow Query Log and the Events Statements tables

- Know why your query is slow by profiling them.

- Fix the problem and profile the query again until query performance is acceptable.

- Avoid code smells like SELECT *, functions in WHERE clause, and more.

It is also good to have a tool to help you in MySQL performance tuning.

dbForge Studio for MySQL is a prime tool for developers. It reduces development time by having Query Profiling tools built in. MySQL performance tuning has never been this good. Why not try it for free? Download dbForge Studio for MySQL today! And experience the difference.

RELATED POSTS

- Magento 1 vs Magento 2

- Product Review – Stellar Repair for MS SQL

- How to repair suspect database in SQL Server

- How to Optimize Your Database Storage in MySQL

- 5 Common Database Management Challenges & How to Solve Them